Border checkpoints are turning into testbeds for AI. As traveller numbers climb and cargo lines stretch longer each year, governments are leaning on algorithms to spot threats faster than human teams ever could. But the moment AI enters a space where a single misclassification can deny someone entry — or miss a genuine risk — the stakes change.

This post looks at what it really takes to deploy AI in border security: the key requirements, risk management approaches, and use cases already in production.

The State of AI Adoption in Border Security

AI at the border is no longer a futuristic concept. It’s a present-day reality quietly reshaping how nations screen travellers and cargo. The US Department of Homeland Security, the Canada Border Services Agency, EU border agencies, and several nations across Africa and APAC are deploying AI systems to keep pace with rising traveller volumes and expanding cargo flows.

75 of the 176 countries globally are already using AI solutions for homeland and border security.

Carnegie’s AI Global Surveillance (AIGS) Index

Key use cases include:

- Real-time video analytics for border surveillance. Border zones generate more data than any human team can realistically monitor — which is why agencies are turning to algorithms as their first line of detection. Modern ML and DL models can sift through thousands of live feeds at once, flagging anomalies like unusual movement, loitering, or emerging smuggling patterns. Instead of staring at screens for hours, officers now get targeted alerts on incidents that actually warrant attention.

- Biometric identity verification at control points. Manual passport stamping is rapidly giving way to automated biometric checks. In the EU, the Entry/Exit System (EES), launched on 12 October 2025, now records facial images and fingerprints of non-EU travellers to match them against previous entries and exits. Australia and New Zealand’s new SmartGate systems push the same idea further — high-throughput identity verification designed to cut queues, reduce human error, and speed up processing at busy airports.

- AI-assisted traveller screening. Border agencies are leaning on predictive analytics to separate routine travellers and cargo from higher-risk cases. Tools like Germany’s RADAR-iTE apply standardized behavioural and historical data to assess the likelihood of politically motivated violent acts among individuals already known to authorities. Instead of depending solely on manual pattern recognition, officers get structured, data-driven risk assessments that help focus attention where it matters most.

- AI-powered surveillance in remote terrain. Large stretches of land and maritime borders remain difficult — and costly — to patrol. AI-enabled surveillance platforms now fill those gaps with a combination of video analytics, ground sensors, and UAVs. A recent Frontex–Bulgarian Border Police trial exemplifies this shift: drone footage, infrared and daylight cameras, and smaller tactical UAV inputs were fused into a single GIS-driven operational picture. The result is real-time situational awareness over terrain where human patrols can’t always be present.

Why AI Adoption at the Border Is Accelerating

Several systemic pressures are pushing border agencies toward automation and speeding up adoption faster than policymakers anticipated.

Travel volumes are on the rise

International travel has bounced back to pre-pandemic levels. Europe alone saw 625 million tourist arrivals between January and September 2025, while irregular migration flows on key Mediterranean routes continue to oscillate.

In the U.S., enforcement encounters along the Southwest border still number in the hundreds of thousands annually, although the numbers have been falling steadily since the new administration entered the White House. With more people on the move (legally and illegally), border forces are facing mounting pressure to keep screening processes both fast and precise.

Cargo volumes are climbing sharply

Global ports and airports are facing steady YoY increases in cargo. In the first eight months of 2025, container volumes hit 126.75 million TEUs — a 4% jump. EU air cargo demand followed the same trend, rising 4.1% YoY in October 2025. More cargo means more inspections, more paperwork, and more opportunity for illicit shipments to slip through without automated support.

Criminal activity rises with volume

Greater movement of people and goods comes with greater exploitation. Canada Border Services Agency (CBSA) opened 70 human-smuggling cases in 2025, on track to exceed the five-year average. The EU recorded over 50,000 illegal crossings on the Central Mediterranean route alone. Traditional workflows struggle to keep pace with this scale, especially when most teams are short-staffed.

Chronic staffing shortages

Border agencies across the U.S. and Europe report the same story: not enough personnel to manage the workload. AI is stepping in not as a replacement, but as a force-multiplying infrastructure that helps small teams manage disproportionately large operational burdens.

Interoperable systems finally make AI feasible

The EU’s integration of SIS, EES, and related databases signals the arrival of the technical backbone AI needs: real-time data exchange, standardized formats, and fewer information silos. With cleaner inputs, models produce fewer false positives and more reliable assessments. Similar modernization efforts are underway across APAC, Africa, and the Americas.

These drivers have moved AI from a “nice to explore” technology to essential infrastructure, one that has already delivered proven operational advantages.

Advantages of AI Border Security Solutions

The pressures driving AI adoption are structural. The advantages it offers are equally systemic.

AI border security solutions enhance situational awareness, accelerate screening, and standardize decisions across vast and complex operational landscapes.

Real-Time Situational Awareness at Scale

Border security is a real-time mission. Conditions shift quickly, and threats emerge without warning. Human teams can’t monitor everything, everywhere, all at once without help from algorithms.

AI-powered video analytics tools can interpret thousands of live feeds and highlight only the activity that truly matters. For instance, iSentry from IntelexVision learns what normal movement looks like in each location and filters out the noise. This reduces operator alerts by as much as 90% to 95%. Instead of scanning screens for hours, officers receive clear, verified events that demand attention.

AI also extends coverage into places where traditional patrols struggle, both onshore and offshore. The United States Coast Guard recently tested the V-BAT system from Shield AI to track vessels across wide ocean areas. The drone’s AI-enabled optical sensor can search for targets for more than thirteen hours. In one operation, the V-BAT supported the interdiction of three smuggling vessels in a single night. This shows how AI can expand detection capacity far beyond human limits.

Faster, More Accurate Identity Verification

Identity verification is one of the most time-sensitive steps at any border. Machine learning and deep learning have significantly raised the accuracy of automated biometric matching for facial recognition, fingerprints, and document analysis, which helps officers process travellers quickly without lowering security standards.

For example, the EU’s new ETIAS system uses ML to create algorithmic traveller profiles based on predefined risk indicators. It approves most applicants within a few seconds. This frees human officers to focus on complex cases while giving low-risk travellers a smoother entry experience.

Some airports are moving even faster. Dubai’s AI-powered passenger corridor allows up to ten people to pass through at once by simply walking along a designated path. Facial recognition and pre-registered biometric data confirm each traveller’s identity in as little as fourteen seconds. This removes the need for passport counters or smart gates and reduces bottlenecks in high-traffic terminals. The system builds on Dubai’s earlier “smart tunnel” and now verifies passenger data before travellers reach a checkpoint. Suspicious cases are escalated to human experts, which keeps oversight intact while dramatically increasing throughput.

Operational Efficiency and Cost Reduction

AI helps border agencies operate with greater precision and far less administrative overhead. It reduces false positives, streamlines manual reviews, and handles the repetitive, high-volume tasks that consume most officer hours. Identity checks, video analysis, and document verification become automated workflows rather than continuous manual effort. This allows human teams to focus on the work that genuinely requires judgment.

Live rollouts show how much efficiency gains matter. Edvantis supported a security technology provider in building an automated driver-cabin identification system that activates scanning frames only when needed and identifies vehicles instantly. This reduces unnecessary scans and speeds up throughput while improving accuracy.

Other innovations deliver similar impacts. Thermal Radar, a network-based thermal surveillance and detection solution, replaces numerous fixed cameras with a single AI-enhanced thermal unit. The system offers a 360-degree view of the terrain and near-instant intrusion detection. With fewer devices needed to cover the terrain, Thermal Radar claims it costs 70% less to operate.

Improved Decision Precision

Human judgment can be undermined by fatigue, bias, and information gaps. Well-trained machine learning models, in contrast, perform with greater consistency, no matter the scale. They can also surface overlooked patterns and anomalies that deserve closer investigation.

By putting decision-assistance engines in the loop, officers move from piecemeal information to a complete operational picture, and their decisions reflect it. For instance, Canada has piloted machine-learning tools across immigration, border control, and refugee workflows.

One automated system sorts visitor record applications into routine and non-routine categories before they reach an officer. The tool also completes basic clerical tasks such as populating form fields and organizing application materials. According to the government, the expected impact is faster processing and quicker decisions for applicants.

Key Considerations When Deploying AI for Border Security

Just like any other software system, AI isn’t without its flaws. For border forces, the stakes are even higher as the algorithms influence decisions with legal, operational, and human consequences.

To ensure that the new AI system adds value (rather than magnifying risks), you should take into account the following considerations.

High False Positive and False Negative Rates

AI models are robust, but imperfect. Some have been known to produce high false alert rates, especially when poorly trained or applied to complex human behavior.

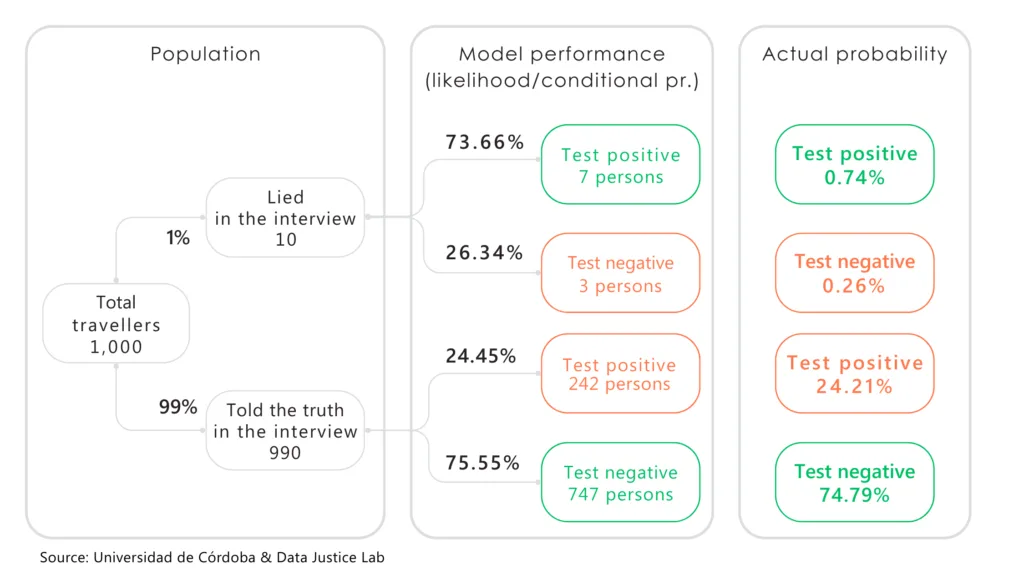

The EU’s emotion recognition project (iBorderCtrl), which was tested in Hungary, Greece, and Latvia between 2016 and 2019, is one example of things going rogue. The tool attempted to detect deception by analyzing facial micro-expressions. In practice, it produced very low accuracy rates and flagged individuals incorrectly in most cases. This showed how fragile certain AI methods can be and how easily misinterpretations can escalate into operational or ethical problems when used at border crossings.

iBorderCtrl’s likelihood to provide correct scores

Source: Universidad de Córdoba & Data Justice Lab

That said, not all AI use cases for border security face the same challenge. Image recognition, object detection, and movement analytics now sit among the AI domains where false positive rates are rather low. For example, while traditional video verification systems still overwhelm operators with alerts, AI-powered platforms have reduced false alerts by 94% in controlled tests.

To keep false positive rates low, always test the models against real operational conditions. Validate model performance on diverse datasets, perform bias and error audits, and refine algorithms continuously rather than treat deployment as a one-time event. High-risk decisions should route through a human reviewer by default, especially when confidence scores fall below predefined thresholds.

You should also document each model’s decision logic and measure field performance over time against metrics like:

- False positive rate (FPR) and false negative rate

- Precision

- Recall

- Confidence score distribution

This creates a feedback loop that strengthens accuracy and gives teams confidence that AI systems support, rather than undermine, their operational goals.
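The metrics listed above can all be derived from four counts: true positives, false positives, true negatives, and false negatives. The following is a minimal sketch, assuming that each model flag has later been checked against a reviewed ground-truth label. The names `ConfusionCounts`, `count_outcomes`, and `field_metrics` are illustrative, and the sample labels are made up.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # flagged, and truly high-risk
    fp: int  # flagged, but actually routine
    tn: int  # cleared, and actually routine
    fn: int  # cleared, but truly high-risk


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def count_outcomes(y_true: list[bool], y_pred: list[bool]) -> ConfusionCounts:
    """Tally outcomes from reviewed ground truth and model flags."""
    c = ConfusionCounts(0, 0, 0, 0)
    for truth, pred in zip(y_true, y_pred):
        if pred and truth:
            c.tp += 1
        elif pred and not truth:
            c.fp += 1
        elif not pred and truth:
            c.fn += 1
        else:
            c.tn += 1
    return c


def field_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Compute the field-performance metrics from the four counts."""
    return {
        "false_positive_rate": safe_div(c.fp, c.fp + c.tn),
        "false_negative_rate": safe_div(c.fn, c.fn + c.tp),
        "precision": safe_div(c.tp, c.tp + c.fp),
        "recall": safe_div(c.tp, c.tp + c.fn),
    }


# Illustrative labels only: True means "high-risk".
y_true = [True, True, False, False, False, True, False, False]
y_pred = [True, False, True, False, False, True, False, False]
metrics = field_metrics(count_outcomes(y_true, y_pred))
```

Recomputing these figures on a fixed schedule, and on fresh field data rather than the original test set, is what turns them into a feedback loop.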

Unified Data Management Practices

Data is the key to making fair decisions. Most agencies have immigration records, police databases, customs systems, airline passenger data, maritime logs, and visa platforms to refer to at any time. But the wrinkle is that these systems rarely “talk” to each other.

For a human, a lack of software interoperability means more tab switching. But for AI algorithms, siloed databases mean potential bias since they cannot form a complete operational picture. This fragmentation limits the accuracy of risk assessments, slows down screenings, and can result in major errors.

To deploy reliable AI models, agencies (and their partners) will need to adopt:

- Harmonized data schemas

- Shared data classification standards

- Secure APIs that support real-time data exchange

- Consistent rules for data collection, retention, and access control

Having robust audit trails is equally important. Agencies must be able to track who accessed what data and for what purpose. This becomes essential when sensitive biometric data is involved.
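One possible shape for such an audit trail is an append-only log in which every entry stores a hash of the previous one, so later edits to earlier entries become detectable. This is a sketch under assumptions, not a reference design: the class `AccessAuditLog`, its field names, and the sample identifiers are all hypothetical, and a real deployment would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AccessAuditLog:
    """Append-only log where each entry carries a hash of the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash everything except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "entry_hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, officer_id: str, record_id: str, purpose: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "officer_id": officer_id,  # who accessed the data
            "record_id": record_id,    # what was accessed
            "purpose": purpose,        # why it was accessed
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True if no entry was altered and the chain is unbroken."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != self._digest(entry):
                return False
            prev_hash = entry["entry_hash"]
        return True


audit_log = AccessAuditLog()
audit_log.record("officer-017", "biometric-record-4821", "secondary inspection")
audit_log.record("officer-042", "biometric-record-4821", "appeal review")
chain_ok = audit_log.verify()
```

The hash chain only detects tampering inside the log. It does not stop someone from deleting the most recent entries, which is why such logs are usually also replicated to a separate system.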

Model Explainability

Some AI models obscure how decisions were made. Facial recognition systems are especially prone to opaque logic. Their decision-making approach is difficult to interpret, and their performance varies across demographic groups. For that reason, facial recognition systems at borders have been heavily criticised by regulators and advocacy groups.

Model explainability is not simply good practice. It is a legal requirement in some regions. GDPR grants individuals the right to understand automated decisions that affect them, and the EU AI Act classifies most border-control use cases as “high-risk.” This means AI border security solutions must come with clear documentation of how models work, what data they use, and how outputs should be interpreted.

Implementing explainability requires a structured and proactive approach. Our AI development team recommends the following:

- Choose interpretable model architectures (e.g., decision trees, naïve Bayes classifiers, linear regression, or k-nearest neighbors). For more complex use cases, you can go with a hybrid approach, where a deep learning model first performs feature extraction from the underlying data and an interpretable module explains the final decision with feature importance. XAI frameworks like LIME and SHAP are great options for that.

- Create and maintain “model cards” that describe training datasets, known limitations, and intended use cases for each developed model. Share these with your workforce to help them better understand the conditions under which a model performs well or poorly.

- Avoid training the model for binary outputs only. Your system should present outputs with context, like confidence scores, feature importance summaries, and uncertainty indicators that show why a traveller or cargo item was flagged. This supports better oversight and reduces the risk of uncritical acceptance of algorithmic decisions.

- Run periodic bias audits. Benchmark model performance across different traveller groups and compare error rates, including false positives and false negatives.
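To illustrate what an output "with context" might look like, here is a minimal sketch of an interpretable linear risk model for cargo manifests. Everything in it is an assumption made for illustration: the feature names, the weights in `WEIGHTS`, the bias, the 0.5 threshold, and the width of the uncertainty band. For a linear model, each feature's contribution is simply its weight times its value, which is what makes this kind of architecture easy to explain.

```python
import math
from dataclasses import dataclass

# Hypothetical weights for an interpretable linear risk model.
WEIGHTS = {
    "declared_weight_mismatch": 1.8,
    "first_time_shipper": 0.6,
    "route_risk_index": 1.1,
}
BIAS = -2.0


@dataclass
class ExplainedScore:
    flagged: bool
    confidence: float                # model probability for the "flag" class
    contributions: dict[str, float]  # per-feature share of the raw score
    uncertain: bool                  # True when the probability is near the cut-off


def explain(features: dict[str, float], threshold: float = 0.5,
            uncertainty_band: float = 0.15) -> ExplainedScore:
    """Score one item and return the decision together with its context."""
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    raw_score = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-raw_score))  # logistic function
    # Sort so the most influential feature is listed first.
    ranked = dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))
    return ExplainedScore(
        flagged=probability >= threshold,
        confidence=probability,
        contributions=ranked,
        uncertain=abs(probability - threshold) < uncertainty_band,
    )


result = explain({
    "declared_weight_mismatch": 1.0,
    "first_time_shipper": 1.0,
    "route_risk_index": 0.5,
})
```

An officer reading `result` sees not just a flag but which feature pushed the score up the most and whether the model was close to the threshold.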

With these elements in place, explainability becomes a safeguard that protects both operational integrity and fundamental rights.
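The periodic bias audit described above can be sketched as a per-group comparison of error rates. This example assumes each reviewed case carries a group label, the model's flag, and a confirmed outcome. The record layout, the function names `audit_by_group` and `max_gap`, and the sample data are hypothetical.

```python
from collections import defaultdict


def audit_by_group(records: list[dict]) -> dict[str, dict[str, float]]:
    """Compute false positive and false negative rates per group.

    Each record needs "group", "flagged" (model output),
    and "high_risk" (reviewed ground truth).
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for r in records:
        if r["flagged"]:
            key = "tp" if r["high_risk"] else "fp"
        else:
            key = "fn" if r["high_risk"] else "tn"
        counts[r["group"]][key] += 1

    report = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["fn"] + c["tp"]
        report[group] = {
            "fpr": c["fp"] / negatives if negatives else 0.0,
            "fnr": c["fn"] / positives if positives else 0.0,
        }
    return report


def max_gap(report: dict[str, dict[str, float]], metric: str) -> float:
    """Largest difference in a metric between any two groups."""
    values = [m[metric] for m in report.values()]
    return max(values) - min(values)


# Made-up sample: all travellers here were confirmed routine on review.
records = [
    {"group": "A", "flagged": True, "high_risk": False},
    {"group": "A", "flagged": False, "high_risk": False},
    {"group": "A", "flagged": False, "high_risk": False},
    {"group": "A", "flagged": False, "high_risk": False},
    {"group": "B", "flagged": True, "high_risk": False},
    {"group": "B", "flagged": True, "high_risk": False},
    {"group": "B", "flagged": False, "high_risk": False},
    {"group": "B", "flagged": False, "high_risk": False},
]
report = audit_by_group(records)
fpr_gap = max_gap(report, "fpr")
```

What size of gap should trigger retraining or a policy review is a decision for the agency and its oversight bodies, not something the code can settle.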

Human-in-the-Loop & Oversight Mechanisms

AI may process information faster than any officer, but only humans can weigh its consequences.

Border-control decisions can determine whether someone gains entry, pursues asylum, or even stays safe — outcomes too serious to hand over entirely to an algorithm. When officers lean too heavily on AI, there’s a danger that a misclassification or misinterpretation slips through unquestioned. For this reason, an effective model oversight process should have the following features:

- Clear intervention thresholds that signal when a human must review the case.

- Structured human review protocols for flagged travellers or cargo, especially in situations where the stakes are high.

- Escalation workflows for cases when model confidence is low and extra support is required.

- Oversight logs that store every model decision and every human override to support audits and investigations.

With these controls, you can ensure decision quality and transparency for all AI-assisted solutions.
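As a rough illustration of how these controls fit together, the sketch below routes each case by confidence and records both model decisions and human overrides. The thresholds (0.90 and 0.60), the `Route` categories, and every function and field name are assumptions chosen for the example. Note that in this sketch the model can only clear a case automatically, and a flag always goes to a person.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO_CLEAR = "auto_clear"
    HUMAN_REVIEW = "human_review"
    ESCALATE = "escalate"


@dataclass
class OversightLog:
    """Stores every model decision and every human override."""
    entries: list[dict] = field(default_factory=list)

    def add(self, case_id: str, event: str, **detail) -> None:
        self.entries.append({"case_id": case_id, "event": event, **detail})


def route_case(case_id: str, flagged: bool, confidence: float, high_stakes: bool,
               log: OversightLog, review_threshold: float = 0.90,
               escalate_threshold: float = 0.60) -> Route:
    """Decide who handles the case and log the model's decision."""
    if confidence < escalate_threshold:
        route = Route.ESCALATE      # low confidence: extra support required
    elif flagged or high_stakes or confidence < review_threshold:
        route = Route.HUMAN_REVIEW  # a flag is never acted on without a person
    else:
        route = Route.AUTO_CLEAR
    log.add(case_id, "model_decision", flagged=flagged,
            confidence=confidence, route=route.value)
    return route


def record_override(case_id: str, officer_id: str, final_decision: str,
                    reason: str, log: OversightLog) -> None:
    """Log an officer's decision that differs from the model's output."""
    log.add(case_id, "human_override", officer_id=officer_id,
            final_decision=final_decision, reason=reason)


oversight_log = OversightLog()
routes = [
    route_case("case-001", flagged=False, confidence=0.97, high_stakes=False, log=oversight_log),
    route_case("case-002", flagged=True, confidence=0.95, high_stakes=False, log=oversight_log),
    route_case("case-003", flagged=False, confidence=0.40, high_stakes=False, log=oversight_log),
]
record_override("case-002", "officer-017", "cleared", "documents verified manually", oversight_log)
```

Keeping the thresholds as explicit parameters makes them easy to review and adjust as field performance data comes in.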

Privacy-Preserving Design

Border agencies manage some of the most sensitive data in the public sector — biometrics, travel histories, identity documents, behavioural profiles, and algorithmic risk scores. One leak can jeopardize an individual’s safety, compromise investigations, and undermine public trust. So privacy controls must be embedded at the architectural level.

The optimal approach is to design the system around the privacy-by-design principle (which is also part of GDPR compliance). Essentially, this means designing systems so that personal data exposure is minimized at each stage of the pipeline: collection, storage, processing, inference, and deletion.

At the architectural level, this requires:

- Data minimization rules. Hard-coded constraints in the ingestion layer ensure the model only receives the fields genuinely required for its task. A passport-validity check, for example, doesn’t need an entire travel history — and the system should be designed to reflect that.

- Purpose limitation. Each dataset and feature is tied to a single, clearly defined, auditable use case. This prevents secondary or unauthorized reuse and reduces the risk of accidental disclosures through model behaviour or inference.

- Built-in anonymization and tokenization. Identifiers that don’t need to remain identifiable are anonymized or tokenized by default. This significantly lowers the chance of re-identification if data is compromised.

- Secure model-training workflows. Training data must be encrypted both at rest and in transit, with model artifacts fully versioned to enable traceability and rapid rollback if a privacy concern emerges.

- Automatic retention and deletion controls. These must be enforced by the system, not left to manual cleanup. Sensitive data is removed as soon as its legal and operational purpose expires, preventing unnecessary long-term storage.
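Three of the controls above (data minimization, tokenization, and retention) can be sketched with the Python standard library alone. The purpose name, the field allow-list, the 30-day retention period, and the demo key are all placeholders, and the keyed hash shown here is pseudonymization rather than full anonymization, since anyone holding the key can recompute the token.

```python
import hashlib
import hmac
from datetime import date, timedelta

# Hypothetical allow-list: which fields each purpose may receive.
ALLOWED_FIELDS = {
    "passport_validity_check": {"passport_number", "expiry_date", "issuing_country"},
}
# Placeholder retention period; real values come from law and policy.
RETENTION = {"passport_validity_check": timedelta(days=30)}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields registered for this purpose.

    Raises KeyError for an unregistered purpose, which blocks ad hoc reuse.
    """
    allowed = ALLOWED_FIELDS[purpose]
    return {k: v for k, v in record.items() if k in allowed}


def tokenize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 token (HMAC)."""
    return hmac.new(secret_key, value.encode(), hashlib.sha256).hexdigest()


def is_expired(collected_on: date, purpose: str, today: date) -> bool:
    """True once the retention period for this purpose has passed."""
    return today > collected_on + RETENTION[purpose]


raw = {
    "passport_number": "X1234567",
    "expiry_date": "2031-05-01",
    "issuing_country": "NZ",
    "travel_history": ["SYD", "DXB", "FRA"],  # not needed for a validity check
}
slim = minimize(raw, "passport_validity_check")
# Downstream systems see a stable token instead of the passport number.
slim["passport_number"] = tokenize(slim["passport_number"], b"demo-key-not-for-production")
```

In practice the key would live in a secrets manager, and a scheduled job would call something like `is_expired` to drive deletion automatically.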

Lastly, models should be monitored for inadvertent memorization of identifiable data (a known vulnerability in deep learning), and training should favour techniques that reduce this risk — such as regularization, gradient clipping, or differential privacy during training. Inference pipelines should avoid exposing raw inputs or internal embeddings that could leak sensitive information.
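To make the gradient clipping and differential privacy ideas more concrete, here is a simplified sketch of one noisy gradient step in the style of differentially private SGD. Each example's gradient is clipped to a maximum L2 norm so that no single record can dominate the update, then Gaussian noise is added to the sum. The function names and parameter values are illustrative. On its own, this code does not provide a formal privacy guarantee, which also requires privacy accounting and is usually handled by a maintained library.

```python
import math
import random


def clip_gradient(grad: list[float], max_norm: float) -> list[float]:
    """Scale the gradient down so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads: list[list[float]], max_norm: float,
                          noise_multiplier: float, rng: random.Random) -> list[float]:
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scales with the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [n / len(clipped) for n in noisy]


# Made-up per-example gradients for a two-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4]]
clipped_first = clip_gradient(grads[0], max_norm=1.0)
# noise_multiplier=0.0 disables the noise so the clipping effect is easy to see.
no_noise = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=0.0,
                                 rng=random.Random(0))
noisy_step = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1,
                                   rng=random.Random(0))
```

The first gradient has norm 5 and is scaled down to norm 1, while the second is already within the bound and passes through unchanged.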

Conclusion

AI is already reshaping how borders operate, but its impact depends entirely on how deliberately it’s deployed. Models must undergo rigorous evaluation for error rates, decision logic, and data safety. Human oversight and explainability mechanisms should be baked in from day one, too, to avoid costly missteps.

To ensure smooth and compliant deployment of AI for border security, you need to build the right foundations, and Edvantis can help with that. Our AI engineering team has substantial experience in developing large-scale data analytics, computer vision, and image recognition models — now running live in production with all the necessary security, privacy, and explainability guardrails. Contact us to learn more about our service lines.