In three short years, generative AI has already reached high maturity rates across a number of knowledge-based workflows — software engineering, document drafting, customer service automation, contract generation, and more. Over three-quarters of organizations now use AI, representing a 55% increase from the previous year.

However, most generative AI use cases have focused on digital workflows rather than on automating more complex industrial processes. A recent survey from MIT NANDA found that companies operating in asset-heavy sectors are still mostly at the stage of early experimentation and isolated pilots of foundation models. Accordingly, the structural impact has been rather limited.

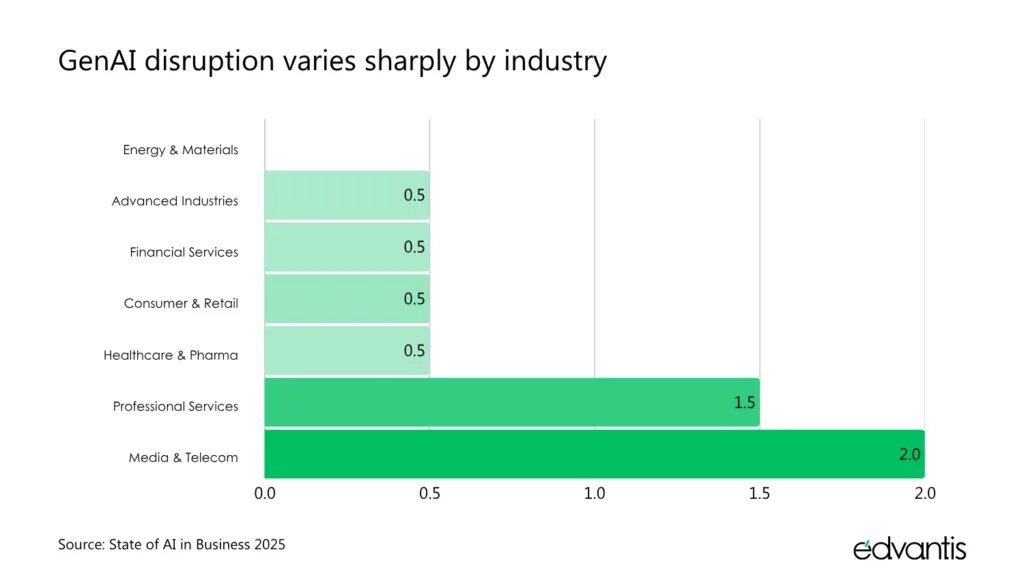

Source: State of AI in Business 2025

This raises the question: are foundation models technologically ready to handle the complexity of physical operations, or are industrial leaders simply exercising caution? Here’s our take.

Industrial Foundation Models: The State of Play

Foundation models mark a new generation of industrial AI — large neural networks pre-trained on vast datasets and capable of generating language, visuals, and even machine control logic. Built on architectures like transformers, GANs, and variational autoencoders, these models provide the backbone for generative and predictive intelligence across domains.

Large language models (LLMs), in particular, have proven effective at reasoning across general knowledge. But off-the-shelf versions rarely suit complex, physics-based environments like manufacturing or logistics. That’s where retrieval-augmented generation (RAG) comes in. By connecting LLMs to domain-specific data sources (from 3D CAD models and sensor feeds to PLC code and maintenance logs), industrial teams can ground general-purpose models in their own data at query time, without retraining them, and turn them into specialized copilots that understand their unique operational context.
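The retrieval step behind RAG can be illustrated in a few lines. The sketch below is a simplified, assumption-laden example: it ranks documents with bag-of-words cosine similarity, where a production system would more likely use an embedding model and a vector store, and it stops at building the prompt without calling any LLM. The document ids, texts, and function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical snippets standing in for maintenance logs, PLC notes, and QA reports.
DOCUMENTS = {
    "maint-log-17": "Conveyor motor M3 tripped on overcurrent after bearing wear; bearing replaced.",
    "plc-note-04": "PLC routine FillValve closes valve V2 when tank level exceeds 80 percent.",
    "qa-report-09": "Weld seam porosity traced to low shielding gas flow on cell 5.",
}


def tokenize(text: str) -> Counter:
    """Turn text into lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc_id: cosine(q, tokenize(DOCUMENTS[doc_id])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Prepend the retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(f"[{d}] {DOCUMENTS[d]}" for d in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Calling `build_prompt("Why did conveyor motor M3 trip?")` places the maintenance log entry in front of the question, so the model answers from plant data instead of general knowledge. The model weights themselves are never changed.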

Thanks to RAG, general-purpose language models are evolving into something far more industrially fluent. By drawing on both real and synthetic data (the blueprints, sensor readings, and edge cases that define how machines behave), these systems can begin to grasp the logic of the physical world. Such models can simulate factory processes, anticipate outcomes, and adapt in real time.

In doing so, they chip away at one of manufacturing’s most persistent bottlenecks: the need for extensive manual programming. What emerges instead are intelligent robots, adaptive control systems, and production lines that can, quite literally, think on their feet.

The Benefits of Deploying Foundation Models in Manufacturing

Industrial AI is moving from promise to proof — with foundation models generating double-digit improvements in operating efficiency, quality, and sustainability.

- Better cross-functional decisions. Generative AI tools can help create a common data circulation layer across divisions (e.g., IT, OT, and business teams) to speed up decision-making. For example, ABB’s Ability Genix Copilot helps surface data from the shop floor and enterprise systems through one conversational interface. Instead of manually querying data with complex BI tools, all business units can get real-time, contextualized answers with natural-language querying.

- Optimized operating costs. Intelligent copilots and vision systems bring automation closer to the edge, reducing downtime and operational waste while improving resource utilization. US-based pulp and paper company Georgia-Pacific reported hundreds of millions of dollars in annual value capture through AI initiatives such as ChatGP, a generative assistant for operators, and a vision-based defect detection system.

- Faster engineering cycles. Pre-trained models can streamline engineering work by translating natural language intent into executable code, so that teams can design, test, and validate ideas much faster. With Siemens Industrial Copilot, engineers can use natural language to write PLC and CNC code and automatically generate visualizations and test plans in minutes.

- Higher first-time-right quality and yield. Vision-driven foundation models detect anomalies faster and with greater precision, even when real defect data is limited. Using Amazon SageMaker Studio and Lookout for Vision, the e-Bike Smart Factory improved defect detection accuracy while reducing false negatives across its production line.

- Better sustainability performance. Data-driven process models make it possible to tune industrial systems for maximum efficiency and minimum environmental impact, often simultaneously. For instance, IBM’s process-level foundation model helps optimize energy-intensive operations like cement kilns, achieving up to a 5% productivity increase and 4% reduction in energy consumption and emissions. AI-guided efficiency translates directly into measurable ESG impact.

Adoption Barriers of Industrial AI Solutions

Industrial leaders aren’t short on enthusiasm for generative AI, but turning pilots into production remains an uphill climb. According to Capgemini, 55% of manufacturing leaders are exploring the potential of generative AI, and 45% have moved to pilot projects.

In most cases, scaled foundation model deployments get blocked by the following challenges:

Legacy Data Architectures

The strength of industrial AI depends on the data infrastructure it relies on. Many manufacturing businesses still run on siloed SCADA networks, decades-old MES deployments, and air-gapped historian systems with no easy APIs. GenAI, however, thrives on structured, high-context, and near-real-time data — the kind most operational technology (OT) stacks weren’t designed to deliver. Connecting LLMs to these environments can break certifications, trigger cybersecurity audits, or require costly downtime.

To move forward, leaders are rebuilding their data infrastructure. About 30% of industrial companies have begun modernizing their architectures to make AI integration possible.

At Edvantis, we’ve seen this transformation up close. Our team helped a Physical Asset Management provider modernize its legacy codebase and unlock the data needed for intelligent analytics. The project involved migrating decades-old code to newer solution versions, restructuring fragmented data pipelines, and implementing Bluetooth Low Energy (BLE) positioning algorithms to process tag data from industrial readers. Using Apache Flink and Java, we built advanced positioning algorithms that fused BLE and GPS inputs for more accurate real-time asset tracking. To handle the resulting data scale, our engineers designed a new AWS-based architecture capable of processing massive sensor data volumes efficiently — prioritizing scalability and cost-effectiveness.
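To give a sense of what fusing BLE and GPS inputs can involve, here is a minimal sketch of one common approach, inverse-variance weighting, where the more precise source gets more weight. This is an illustrative assumption, not the algorithm used in the project described above, and it is written in Python for brevity instead of the Java and Apache Flink stack mentioned. The `Fix` type, the local coordinate frame, and the error estimates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    """A position estimate in a local site frame (assumed), in metres."""
    x: float      # metres east of the site origin
    y: float      # metres north of the site origin
    sigma: float  # estimated 1-sigma position error, must be > 0


def fuse(ble: Fix, gps: Fix) -> Fix:
    """Combine two independent position estimates by inverse-variance weighting."""
    if ble.sigma <= 0 or gps.sigma <= 0:
        raise ValueError("sigma must be positive")
    w_ble = 1.0 / ble.sigma ** 2
    w_gps = 1.0 / gps.sigma ** 2
    total = w_ble + w_gps
    x = (w_ble * ble.x + w_gps * gps.x) / total
    y = (w_ble * ble.y + w_gps * gps.y) / total
    # The fused estimate is tighter than either input when errors are independent.
    sigma = (1.0 / total) ** 0.5
    return Fix(x, y, sigma)
```

Indoors, where GPS error grows, the fused position follows the BLE estimate more closely. Outdoors, the weighting shifts the other way. A streaming implementation would typically add time alignment and filtering on top of this step.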

Many leaders are also turning to Industrial DataOps, the fastest-growing segment in industrial software, expanding at nearly 49% CAGR through 2028. DataOps platforms help clean, contextualize, and orchestrate data flows for foundation model consumption. Vendors like Litmus Edge and Cognite Atlas AI are already embedding small language models and agentic AI workflows directly at the edge — a clear signal that the OT layer itself is evolving into a smarter, AI-ready substrate.

Long Model Validation Cycles

Naturally, the industrial manufacturing sector has a high bar for safety and security. Extensive testing must be done before models can control or advise on safety-critical systems. Model validation cycles, on average, take 6 to 18 months because the stakes are high. A model with a high rate of false positives or missed detections in maintenance or quality control can trigger unplanned downtime, recalls, and reputational losses. Regulatory compliance requirements further extend model deployment timelines.

Nonetheless, progress is happening. Audi, in partnership with Siemens, has pioneered a TÜV-certified virtual PLC solution — essentially moving the “brains” of factory machines into the cloud without compromising safety. The goal is to build a local cloud for production, shared by all the plants, to optimize standard manufacturing processes.

“We’re virtualizing these brains,” said Cedrik Neike, CEO of Siemens Digital Industries. “This accelerates digital transformation and increases agility, efficiency, and security in production.” It’s a slow evolution, but one that’s redefining what trustworthy automation can look like.

Data Security and IP Concerns

Manufacturers sit on sensitive data (design files, process parameters, supplier records), and few are comfortable letting third-party language models parse them. Even with private cloud instances, the fear of leaks or intellectual property exposure runs deep. The sector’s caution isn’t paranoia: manufacturing remains the world’s most targeted industry for cyberattacks.

The issue of data security often stems from weak AI governance. Only 17% of companies have AI governance frameworks in place, while most have massive blind spots in how data is collected, accessed, and used by AI agents.

To secure this new layer of automation, leaders should treat AI agents like any other critical asset:

- Register every AI copilot, assistant, or control agent in the OT inventory.

- Assign each deployment a unique identifier, access policy, and audit protocol.

- Implement strong authentication and role-based access controls before allowing connection to MES, ERP, or historian systems.

- Deploy AI agents in segmented networks, keeping a strict air gap between assistive and control functions.

- Track every data source and maintain lineage metadata to ensure outputs remain explainable and auditable.
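The checklist above can be sketched as a small in-memory registry. This is an illustrative example only, assuming a single process and no persistence. The class names, system labels, and log fields are hypothetical, and a real deployment would sit behind the plant’s identity provider and asset inventory.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentRecord:
    """One registered AI agent with its own identifier and access policy."""
    name: str
    allowed_systems: frozenset
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class AgentRegistry:
    """Hypothetical inventory that gates agent access and keeps an audit trail."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}
        self.audit_log: list[dict] = []

    def register(self, name: str, allowed_systems: set) -> AgentRecord:
        """Add an agent to the inventory and assign it a unique identifier."""
        record = AgentRecord(name, frozenset(allowed_systems))
        self._agents[record.agent_id] = record
        return record

    def authorize(self, agent_id: str, system: str, sources: tuple = ()) -> bool:
        """Allow access only for registered agents and systems in their policy."""
        record = self._agents.get(agent_id)
        allowed = record is not None and system in record.allowed_systems
        # Every decision is logged, along with the data sources involved (lineage).
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "system": system,
            "sources": list(sources),
            "allowed": allowed,
        })
        return allowed
```

A maintenance copilot registered with `{"historian"}` would be allowed to read historian data but denied a connection to a control system. Both decisions end up in `audit_log`.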

Emerging Use Cases of Foundation Models in Manufacturing

Industrial foundation models have yet to be as widely deployed as gen AI solutions for software engineering or marketing automation. But several pioneers in the industrial space have already run successful PoCs and are now aiming for large-scale roll-outs.

Below are four foundation model use cases in manufacturing that demonstrate how intelligent, context-aware AI can enhance design, production, maintenance, and risk management.

Generative Design

Generative design shows how foundation models can extend human creativity rather than replace it. Engineers no longer start from a blank page. Based on the reference database and provided criteria (e.g., component weight or desired sustainability characteristics), they can produce multiple design variants for evaluation. Each component prototype can be digitally validated before any physical equivalent gets manufactured.

A digital-first approach to design has already gained ground in heavy industry. Claudius Peters, which produces equipment for cement and gypsum plants, partnered with Autodesk to introduce AI-driven generative design into its engineering workflows. The results were striking. Components designed by the model were up to sixty percent lighter without compromising durability. Even after retooling for conventional production methods, the final parts remained thirty percent lighter and significantly more cost-efficient.

CUPRA, the performance brand under Volkswagen, also launched a successful generative design pilot. With PTC Creo, its teams now create and test three-dimensional prototypes in a fraction of the time it once took. The company reports a ten percent weight reduction across several components and a fifteen percent drop in material costs.

Effectively, generative design turns engineering into a partnership between human intuition and machine reasoning. The foundation model does not dictate the answer. Rather, it helps engineers explore the space of what might be possible and choose the optimal approach.

Vision-Driven Quality Control

Quality control is often a balancing act between precision and speed. Foundation vision models, pre-trained to understand images across diverse industrial contexts, are changing that equation. New techniques like FastVLM enable low-latency processing of high-resolution images, along with efficient visual query processing, making them suitable for powering real-time, on-device applications such as visual quality control over a fast-moving assembly line. With ongoing access to real and synthetic training data, the algorithms continue to learn long after the initial deployment.

Pegatron, a major Taiwanese electronics manufacturer, has already seen the payoff. The company architected an AI-driven inspection system, based on NVIDIA’s Omniverse Replicator, Isaac Sim, and Metropolis frameworks. The agent trains on both authentic and synthetic imagery, allowing it to reach nearly perfect accuracy in detecting manufacturing defects.

After deploying an AI-augmented assembly process, Pegatron measured:

- 4X increase in inspection throughput

- 7% reduction in labor costs per assembly line

- 67% decrease in defect rates

Audi is pursuing a similar vision with Siemens technology. Its weld inspection system, powered by Siemens’ Application AI Inference Server, analyzes car body joints in real time. It can flag irregularities instantly, improving consistency and reducing costly rework. The quality control agent seamlessly integrates with existing IT and OT systems, resulting in faster feedback loops.

Maintenance Copilots

The best technicians know their machines by feel, but that expertise is often trapped in decades of manuals, logs, and personal experience. Foundation models can be used to aid knowledge dissemination and training. The new generation of “maintenance copilots” can summarize system histories, interpret sensor data, and suggest next steps when issues arise. They learn from every intervention and grow smarter with use.

Siemens has developed a suite of Industrial Copilots, with one of them fine-tuned for maintenance. It supports the full maintenance cycle, from fault detection to predictive performance optimization. The copilot helps diagnose the malfunction and investigate root causes, plus provides advice for preventing future breakdowns. Early pilots show a 25% average reduction in reactive maintenance time.

Rolls-Royce is among the early adopters of Siemens’ technology. The team recently integrated Siemens’ digital thread technology and Insights Hub, a generative AI copilot that interprets telemetry data in real time. The copilot can translate error codes into plain-language explanations and recommend the most effective corrective actions. Engineers can also use it to generate custom OEE dashboards that reveal patterns across multiple production lines.
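For readers less familiar with the metric behind those dashboards, overall equipment effectiveness (OEE) is conventionally computed as availability × performance × quality. The sketch below shows that standard calculation. The function name, parameters, and shift figures are illustrative assumptions and are not taken from any Siemens product.

```python
def oee(planned_min: float, downtime_min: float,
        ideal_cycle_min: float, total_count: int, good_count: int) -> dict:
    """Compute OEE and its three factors for one production run."""
    run_min = planned_min - downtime_min
    if planned_min <= 0 or run_min <= 0 or total_count <= 0:
        raise ValueError("planned time, run time, and total count must be positive")
    availability = run_min / planned_min                     # share of planned time actually running
    performance = (ideal_cycle_min * total_count) / run_min  # actual speed vs. ideal speed
    quality = good_count / total_count                       # share of units without defects
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical shift: 480 planned minutes, 60 minutes down,
# 0.5 minutes ideal cycle time, 756 units produced, 720 of them good.
shift = oee(planned_min=480, downtime_min=60,
            ideal_cycle_min=0.5, total_count=756, good_count=720)
```

For this made-up shift, availability is 0.875, performance is 0.9, and the resulting OEE is about 0.75. Breaking the score into its factors shows whether losses come from stoppages, slow cycles, or defects.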

Maintenance copilots represent a quiet revolution. They turn decades of fragmented data into living intelligence that guides people through complex, high-stakes work with speed and confidence.

Risk Intelligence

As disruption has become “business as usual”, leaders seek extra foresight. Foundation models can give manufacturers a new kind of visibility: the ability to predict risk long before it materializes. AI agents can scan for signals across multiple data sources — supplier updates, ESG reports, news feeds, and operational data — and connect them into an evolving map of exposure and resilience.

Prewave is one of the pioneers in this space. The company developed an AI-driven system for tracking over 140 categories of global risks, from natural disasters and financial distress to cyber attacks and compliance violations. The algorithm parses multilingual data streams and flags potential disruptions in real time. Early adopters report a 40% reduction in manual monitoring workloads, freeing compliance teams to focus on proactive mitigation rather than data triage.

Tradeverifyd has taken a complementary approach. By integrating OpenCorporates’ global company intelligence, it built an AI risk engine that maps supplier ownership structures and compliance histories. The platform’s model produces a dynamic resilience score for each vendor, helping companies spot hidden dependencies before they become bottlenecks. The integration of external data sources simplified development and accelerated go-to-market by six months, a significant edge in a space where speed of insight often defines competitiveness.

By deploying AI solutions across the supply chain, leaders can switch from a reactive response, which often leads to delays and elevated operating costs, to a more proactive strategy. Combined with leaner business processes, predictive risk intelligence can help shape a more resilient business model that can absorb temporary market shocks.

Conclusion

Industrial foundation models are already proving their value in the physical world. They connect disparate data sources — design data, sensor signals, and production insights — into a continuous stream of intelligence. This unlocks faster iteration, leaner processes, and a sharper ability to predict and adapt to change.

But the real unlock happens before deployment. Industrial AI models require the right target environment, which includes a scalable system architecture, real-time data pipelines, and a responsible governance layer. Getting those elements right is what separates pilots from production-grade success.

At Edvantis, we help industrial teams lay that groundwork. From modernizing legacy data systems to building scalable AI pipelines on AWS, our software engineering team helps you implement industrial AI without compliance or security risks. Contact us for more information about our services.